Normalization example

A one-dimensional input and its output after normalization

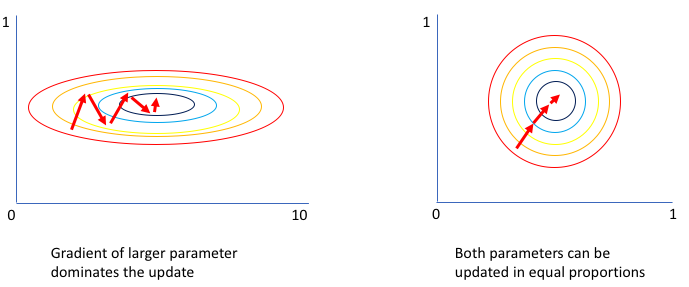
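The slide does not say which normalization the figure uses. As a minimal sketch, assuming z-score standardization (subtract the mean, divide by the standard deviation), a one-dimensional input could be normalized like this. The function name `standardize` and the sample values are illustrative only.

```python
import math

def standardize(values):
    """Z-score normalization of a 1D list (assumed variant, not confirmed by the slide)."""
    mean = sum(values) / len(values)
    # Population variance: average squared distance from the mean.
    var = sum((v - mean) ** 2 for v in values) / len(values)
    std = math.sqrt(var)
    return [(v - mean) / std for v in values]

raw = [2.0, 4.0, 6.0, 8.0]      # illustrative 1D input
normalized = standardize(raw)   # zero mean, unit variance
print(normalized)
```

After this transform the values keep their ordering and relative spacing, but are centered at zero with unit variance.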

Normalization in machine learning

"Effects": faster training convergence, more "regular" inputs, less overfitting, better generalization

Normalization vs. Regularization

- Different goals:

  - Normalization = adjusting the data

    - For example:

    - For example:

  - Regularization = adjusting the prediction / loss function

    - For example:

    - For example:
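To make the contrast concrete, here is a small sketch. The specific choices (per-feature z-score as the normalization, an L2 penalty as the regularization, and the `weight_decay` value) are assumptions for illustration, not the examples from the slide.

```python
import torch

torch.manual_seed(0)
x = torch.randn(8, 3) * 10 + 5            # raw features on an arbitrary scale
y = torch.randn(8, 1)                     # regression targets
w = torch.randn(3, 1, requires_grad=True)

# Normalization: transform the data itself (here, per-feature z-score).
x_norm = (x - x.mean(dim=0)) / x.std(dim=0, unbiased=False)

# Regularization: leave the data alone and add a penalty term to the loss.
weight_decay = 0.01                       # hypothetical penalty strength
mse = ((x_norm @ w - y) ** 2).mean()
loss = mse + weight_decay * w.pow(2).sum()
```

The first step changes what the model sees; the second changes what the optimizer minimizes.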

Introducing normalization into large language models

Normalization: "adjusting the data distribution"

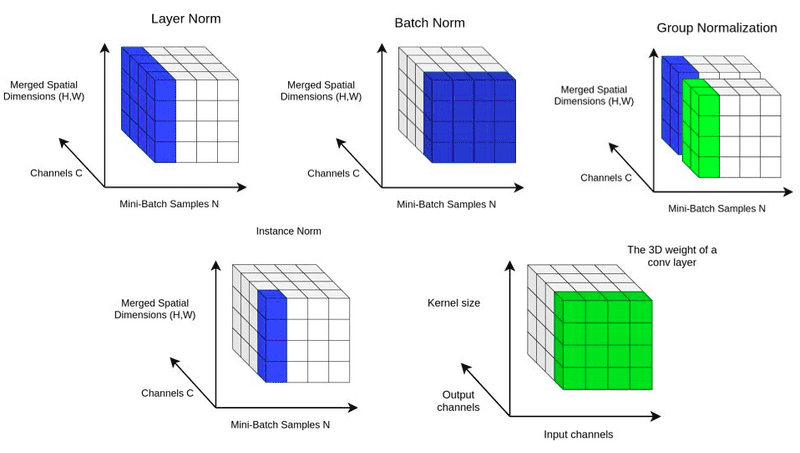

- What does the data look like? It is all tensors, with many dimensions!

  - Raw input: vocab embedding

    - tensor shape: <batch_size, sequence_length, hidden_dim>

  - Intermediate-layer representations of a deep learning model (hidden states/representations)

    - tensor shape: <batch_size, sequence_length, hidden_dim>

Normalization case study

Design considerations for normalization

- tensor shape: <batch_size, sequence_length, hidden_dim>

- Choose the most suitable dimension to normalize over

  - batch: X=[batch_size, sequence_length, hidden_dim]

  - sequence: X=[sequence_length, hidden_dim]

  - hidden: <bs, seq, hidden> => <N, hidden>, X=[hidden_dim]

    - Also known as LayerNorm
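The three options can be sketched as follows, reading X as the slice whose mean and variance are pooled together. That reading, the helper name `standardize`, and the tensor sizes are assumptions made for illustration.

```python
import torch

torch.manual_seed(0)
x = torch.randn(2, 5, 8)  # <batch_size, sequence_length, hidden_dim>

def standardize(t, dims, eps=1e-5):
    """Zero-mean, unit-variance scaling with statistics pooled over `dims`."""
    mean = t.mean(dim=dims, keepdim=True)
    var = t.var(dim=dims, keepdim=True, unbiased=False)
    return (t - mean) / torch.sqrt(var + eps)

over_batch = standardize(x, dims=(0, 1, 2))  # X = whole batch tensor
over_seq = standardize(x, dims=(1, 2))       # X = one sequence: [seq, hidden]
over_hidden = standardize(x, dims=(2,))      # X = one token: [hidden], LayerNorm-style
```

Only the last variant computes statistics that are independent of both the batch size and the sequence length.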

A currently popular LayerNorm variant: RMSNorm

LayerNorm

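- Standardizes each token along the hidden_dim dimension, so it does not depend on the batch size or sequence length

- Computation:

- Learnable parameters

- Advantage: in autoregressive sequence settings, activation distributions are more stable and gradients propagate more easily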
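As a minimal sketch of the computation, assuming the standard LayerNorm definition (per-token mean and variance over hidden_dim, followed by a learnable scale and shift), a hand-written version can be checked against `torch.nn.functional.layer_norm`. The function name `layer_norm_manual` and the tensor sizes are illustrative.

```python
import torch
import torch.nn.functional as F

def layer_norm_manual(x, weight, bias, eps=1e-5):
    # Per-token statistics over the last (hidden_dim) axis.
    mean = x.mean(dim=-1, keepdim=True)
    var = x.var(dim=-1, keepdim=True, unbiased=False)
    x_hat = (x - mean) / torch.sqrt(var + eps)
    # Learnable parameters: elementwise scale and shift.
    return x_hat * weight + bias

torch.manual_seed(0)
x = torch.randn(2, 5, 8)   # <batch_size, sequence_length, hidden_dim>
weight = torch.ones(8)     # scale, initialized to 1
bias = torch.zeros(8)      # shift, initialized to 0
out = layer_norm_manual(x, weight, bias)
reference = F.layer_norm(x, (8,), weight, bias, eps=1e-5)
```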

RMSNorm

- torch 2.8 provides an implementation of the RMSNorm class: torch.nn.RMSNorm

- Principle: "regularizes the summed inputs to a neuron in one layer according to root mean square (RMS)"

How can we implement RMSNorm ourselves with torch operators?

Hand-written RMSNorm

- Input and output shape: <batch_size, sequence_length, hidden_dim>

- Computations involved

  - Square, mean, (reciprocal) square root

    - torch provides: tensor.pow, tensor.mean, torch.rsqrt

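Coding RMSNorm

Hand-written

input = input.to(torch.float32)  # compute in float32 for numerical stability
variance = input.pow(2).mean(-1, keepdim=True)  # mean of squares over hidden_dim
hidden_states = input * torch.rsqrt(variance + variance_epsilon)  # variance_epsilon: small constant that avoids division by zero

Direct call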

layerNorm = nn.RMSNorm([4])

hidden_states = layerNorm(input)
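The hand-written lines above can be wrapped into a reusable module. This is a sketch under stated assumptions: the class name `SimpleRMSNorm`, the default `eps=1e-6`, and the learnable `weight` initialized to ones are illustrative choices, not taken from the slides.

```python
import torch
from torch import nn

class SimpleRMSNorm(nn.Module):
    """Hypothetical minimal RMSNorm: x / sqrt(mean(x^2) + eps) * weight."""

    def __init__(self, hidden_dim, eps=1e-6):
        super().__init__()
        self.weight = nn.Parameter(torch.ones(hidden_dim))  # learnable scale
        self.eps = eps

    def forward(self, hidden_states):
        input_dtype = hidden_states.dtype
        hidden_states = hidden_states.to(torch.float32)
        # Mean of squares over the last (hidden_dim) axis.
        variance = hidden_states.pow(2).mean(-1, keepdim=True)
        hidden_states = hidden_states * torch.rsqrt(variance + self.eps)
        return self.weight * hidden_states.to(input_dtype)

torch.manual_seed(0)
x = torch.randn(2, 3, 4)              # <batch_size, sequence_length, hidden_dim>
norm = SimpleRMSNorm(4)
y = norm(x)
rms_after = y.pow(2).mean(-1).sqrt()  # close to 1 for every token
```

Unlike LayerNorm, no mean is subtracted and there is no bias term, so each token needs only one reduction.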

RoPE implementation

2D implementation of RoPE

n-dimensional implementation of RoPE

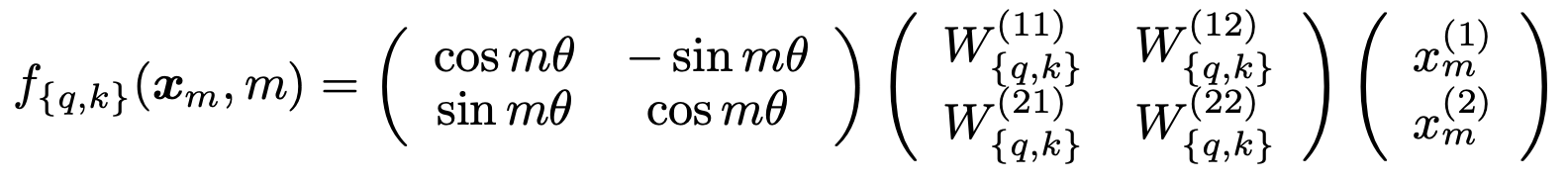

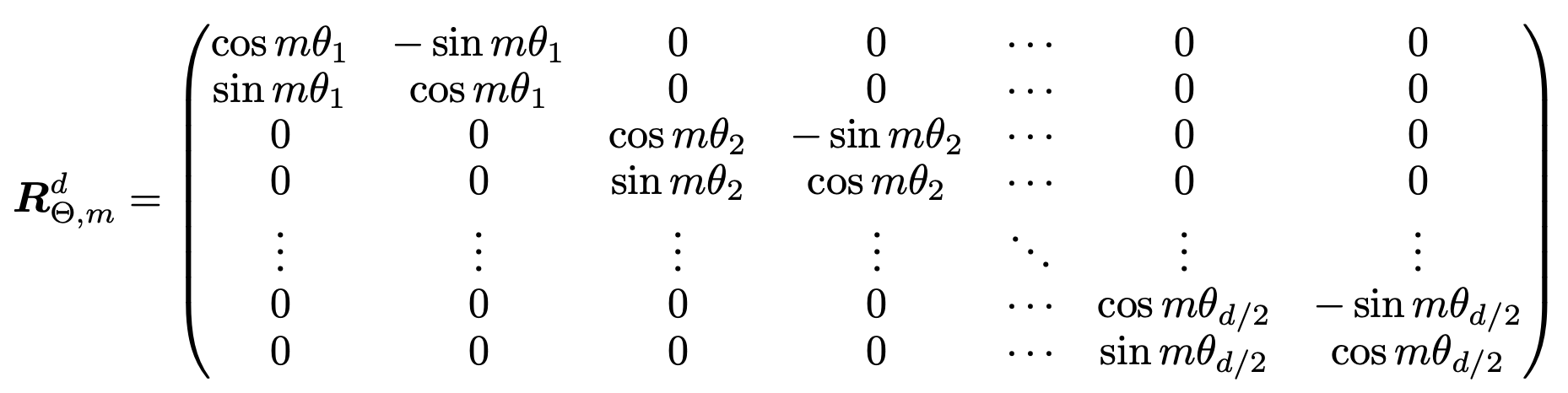

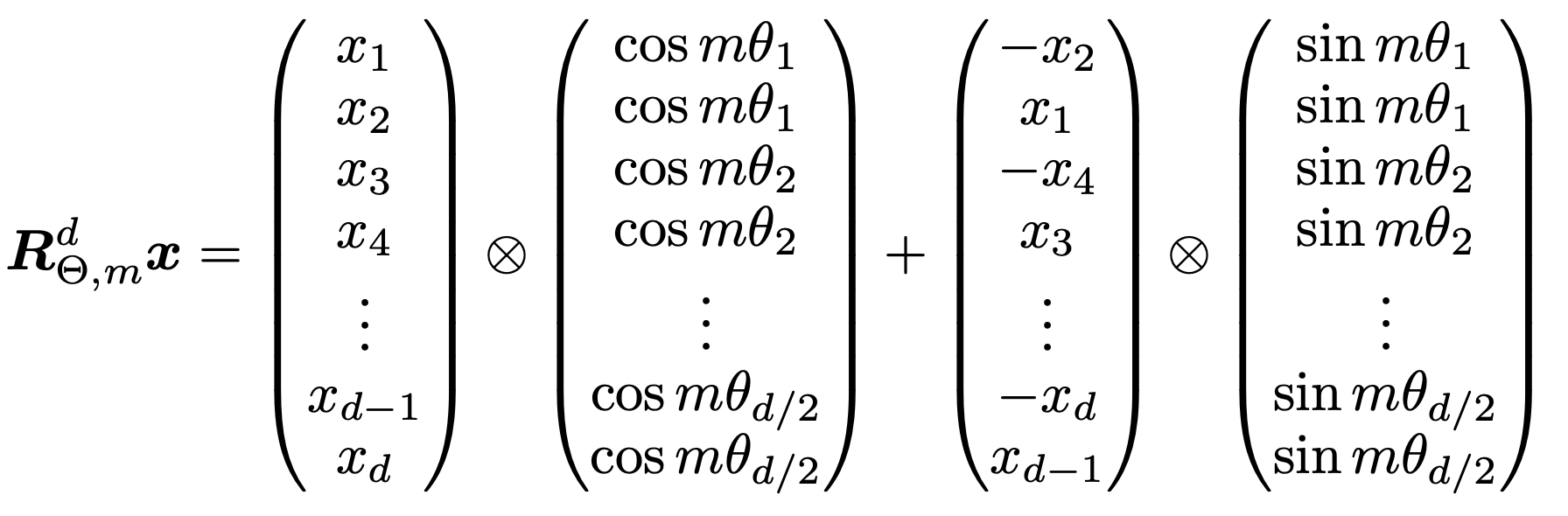

RoPE formulas

- Base frequencies:

- Position angles:

- Rotation transform:

- Can be written as
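For reference, the four items can be sketched with the commonly used RoPE formulation. The notation is an assumption: $d$ is the head dimension, $m$ the token position, the base is taken to be 10000, and dimensions are paired as $(x_i, x_{i+d/2})$ to match the `rotate_half` convention rather than the adjacent-pair convention.

```latex
% Base frequencies, for i = 0, ..., d/2 - 1
\theta_i = 10000^{-2i/d}

% Position angle for the token at position m
\phi_{m,i} = m\,\theta_i

% Rotation of the pair (x_i, x_{i+d/2})
\begin{pmatrix} x'_i \\ x'_{i+d/2} \end{pmatrix}
=
\begin{pmatrix}
\cos\phi_{m,i} & -\sin\phi_{m,i} \\
\sin\phi_{m,i} & \cos\phi_{m,i}
\end{pmatrix}
\begin{pmatrix} x_i \\ x_{i+d/2} \end{pmatrix}

% Equivalent elementwise form
x' = x \odot \cos\Phi_m + \operatorname{rotate\_half}(x) \odot \sin\Phi_m,
\qquad
\Phi_m = (\phi_{m,0}, \dots, \phi_{m,d/2-1},\ \phi_{m,0}, \dots, \phi_{m,d/2-1})
```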

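RoPE implementation

- Goal: implement RoPE (the corresponding

  - Prepare a set of

  - Prepare a set of

- Build the RoPE matrix:

  - Compute cos in batch, then compute sin in batch

  - torch operators involved

    - torch.arange, torch.sin, torch.cos, torch.outer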
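A sketch of how these operators could be combined to build the cos and sin tables in one pass. The function name `build_rope_cache`, the base of 10000, and the duplication of the angles across both halves of the head dimension (to match a `rotate_half`-style layout) are assumptions.

```python
import torch

def build_rope_cache(seq_len, head_dim, base=10000.0):
    """Return cos and sin tables of shape (seq_len, head_dim)."""
    # One frequency per pair of dimensions: base^(-2i / head_dim).
    inv_freq = 1.0 / (base ** (torch.arange(0, head_dim, 2).float() / head_dim))
    positions = torch.arange(seq_len).float()
    # Outer product gives the angle for every (position, frequency) pair.
    angles = torch.outer(positions, inv_freq)      # (seq_len, head_dim // 2)
    angles = torch.cat((angles, angles), dim=-1)   # (seq_len, head_dim)
    return torch.cos(angles), torch.sin(angles)

cos, sin = build_rope_cache(seq_len=6, head_dim=8)
```

Position 0 has angle zero everywhere, so its row is all ones in `cos` and all zeros in `sin`, which leaves the first token unrotated.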

Efficient RoPE implementation

import torch

def rotate_half(x):
    """Rotates half the hidden dims of the input."""
    x1 = x[..., : x.shape[-1] // 2]
    x2 = x[..., x.shape[-1] // 2 :]
    return torch.cat((-x2, x1), dim=-1)

def apply_rotary_pos_emb(q, k, cos, sin, unsqueeze_dim=1):
    cos = cos.unsqueeze(unsqueeze_dim)  # add an axis so cos/sin broadcast over the heads of q and k
    sin = sin.unsqueeze(unsqueeze_dim)
    q_embed = (q * cos) + (rotate_half(q) * sin)
    k_embed = (k * cos) + (rotate_half(k) * sin)
    return q_embed, k_embed
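A quick way to check that this rotation behaves as intended is to verify that the attention score between a rotated query and key depends only on their relative offset. The helper `rope` below is a hypothetical single-vector version written for this check, with an assumed base of 10000.

```python
import torch

def rotate_half(x):
    x1 = x[..., : x.shape[-1] // 2]
    x2 = x[..., x.shape[-1] // 2 :]
    return torch.cat((-x2, x1), dim=-1)

def rope(x, position, base=10000.0):
    """Rotate one vector of shape (head_dim,) to the given integer position."""
    head_dim = x.shape[-1]
    inv_freq = 1.0 / (base ** (torch.arange(0, head_dim, 2).float() / head_dim))
    angles = position * inv_freq
    angles = torch.cat((angles, angles), dim=-1)
    return x * torch.cos(angles) + rotate_half(x) * torch.sin(angles)

torch.manual_seed(0)
q, k = torch.randn(8), torch.randn(8)
score_a = torch.dot(rope(q, 5), rope(k, 2))     # positions 5 and 2, offset 3
score_b = torch.dot(rope(q, 13), rope(k, 10))   # positions 13 and 10, offset 3
```

Both scores agree up to floating-point error, and the rotation leaves the vector norm unchanged.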

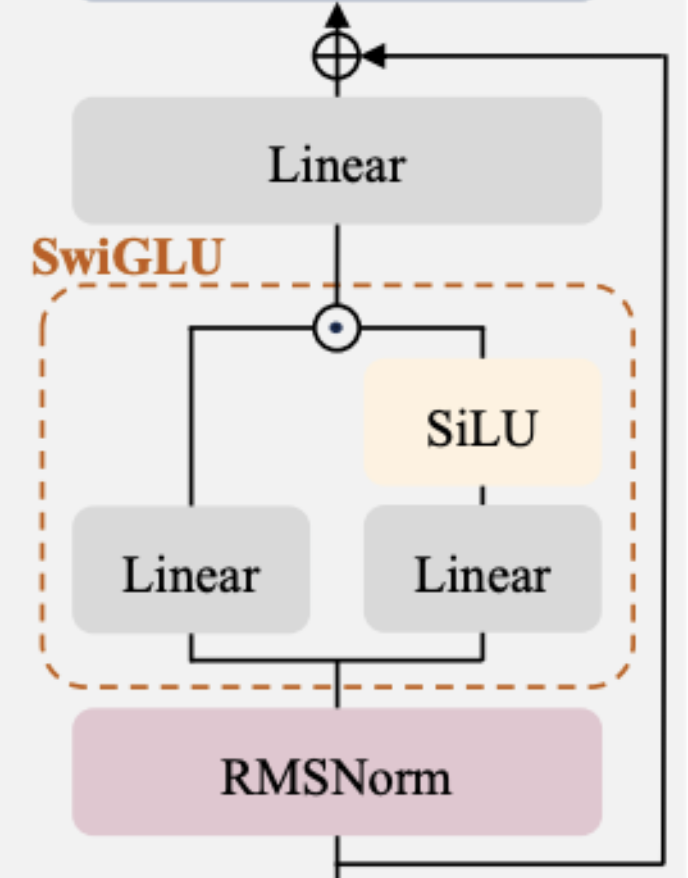

Feed-forward network (FFN)

FFN implementation: LlamaMLP

(mlp): LlamaMLP(

(gate_proj): Linear(in_features=2048, out_features=8192, bias=False)

(up_proj): Linear(in_features=2048, out_features=8192, bias=False)

(down_proj): Linear(in_features=8192, out_features=2048, bias=False)

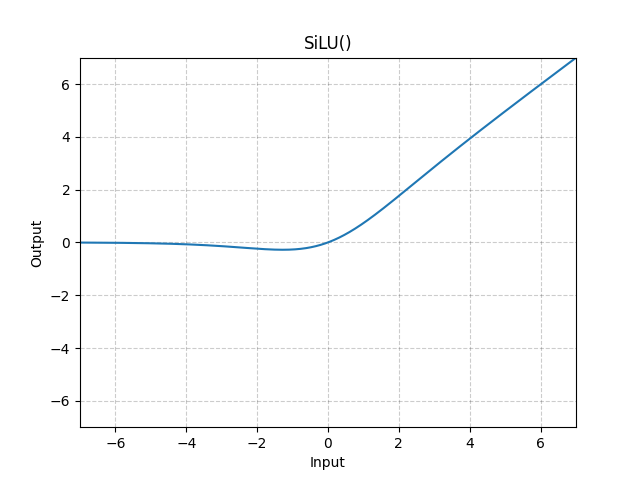

(act_fn): SiLU()

)

- Components: three nn.Linear layers and one SiLU activation function

  - SiLU: torch.nn.functional.silu(x)

  - Linear: torch.nn.Linear(in_features, out_features)

FFN workflow

- Input tensor: <batch_size, sequence_length, hidden_dim>

- Step 1:

  - Apply gate_proj, then the SiLU activation, to obtain the gate tensor

  - Apply up_proj to obtain the up tensor

- Step 2: elementwise multiply the gate tensor and the up tensor

- Step 3: apply down_proj to obtain the down tensor
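The three steps can be sketched as a small module. The class name `GatedMLP` and the reduced sizes (16 and 64 instead of 2048 and 8192) are assumptions chosen so the example runs quickly; the layer names follow the printout above.

```python
import torch
from torch import nn
import torch.nn.functional as F

class GatedMLP(nn.Module):
    """Hypothetical LlamaMLP-style feed-forward block."""

    def __init__(self, hidden_dim, intermediate_dim):
        super().__init__()
        self.gate_proj = nn.Linear(hidden_dim, intermediate_dim, bias=False)
        self.up_proj = nn.Linear(hidden_dim, intermediate_dim, bias=False)
        self.down_proj = nn.Linear(intermediate_dim, hidden_dim, bias=False)

    def forward(self, x):
        gate = F.silu(self.gate_proj(x))   # step 1: gate tensor after SiLU
        up = self.up_proj(x)               # step 1: up tensor
        hidden = gate * up                 # step 2: elementwise multiply
        return self.down_proj(hidden)      # step 3: project back to hidden_dim

torch.manual_seed(0)
mlp = GatedMLP(hidden_dim=16, intermediate_dim=64)
x = torch.randn(2, 5, 16)   # <batch_size, sequence_length, hidden_dim>
out = mlp(x)
```

The output has the same shape as the input, so the block can be dropped into a residual connection.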

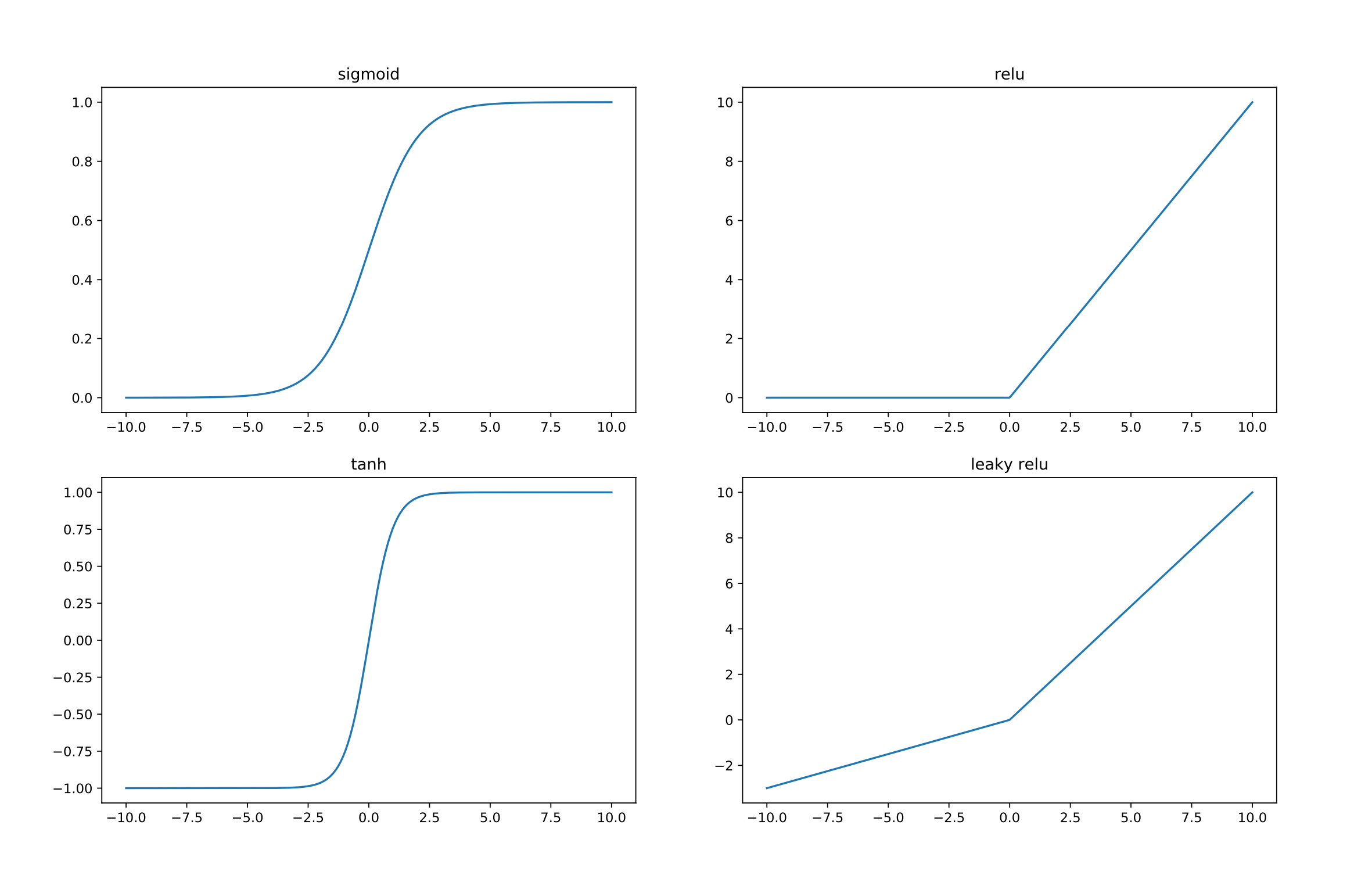

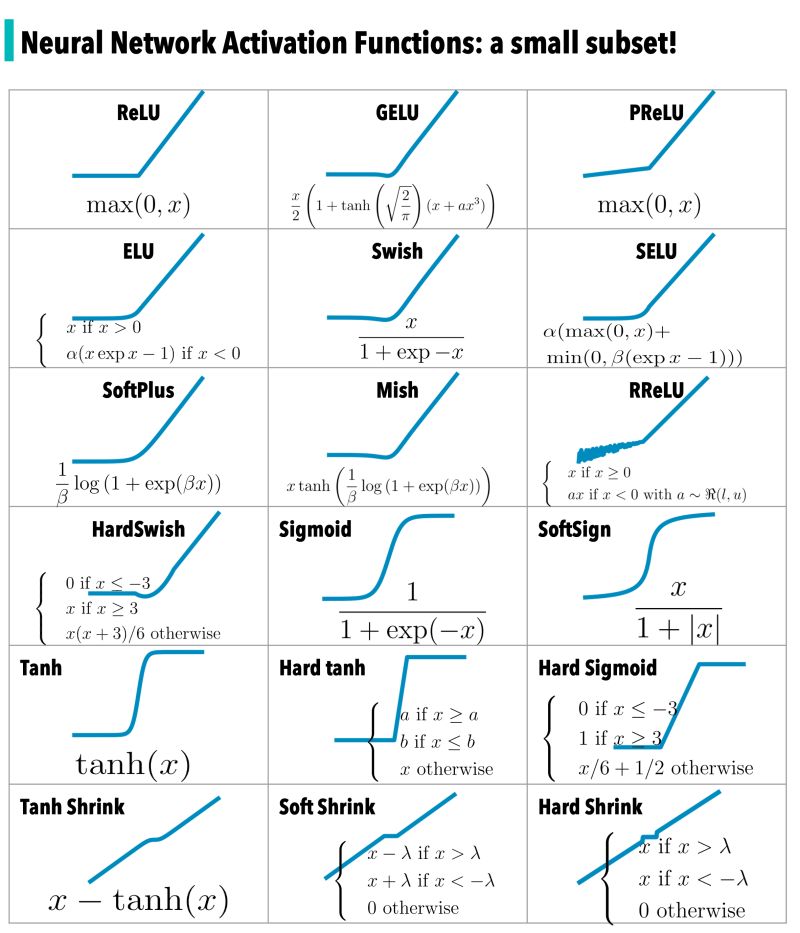

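Activation functions (introducing nonlinearity)

- Introducing a nonlinear function increases the model's ability to represent nonlinear relationships; inside a model, such a function is called an activation function

  - Linear layer:

  - Activation:

  - Linear layer:

- Types of activation

  - ReLU, Sigmoid, SwiGLU, ...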
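Why the activation matters can be seen in a short sketch: two linear layers with nothing in between collapse into a single linear map, while inserting a nonlinearity (ReLU is used here as an arbitrary choice) breaks that equivalence. The shapes and weight names are illustrative.

```python
import torch

torch.manual_seed(0)
x = torch.randn(4, 3)
W1, W2 = torch.randn(3, 5), torch.randn(5, 2)

stacked = (x @ W1) @ W2              # linear -> linear
collapsed = x @ (W1 @ W2)            # one equivalent linear layer
with_relu = torch.relu(x @ W1) @ W2  # linear -> activation -> linear
```

`stacked` and `collapsed` match up to floating-point error, whereas `with_relu` cannot be reproduced by any single weight matrix in general.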

Classic activation functions

- Sigmoid:

- Tanh:

- ReLU:

- Leaky ReLU:
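For reference, the standard scalar definitions of these four functions can be written in a few lines. The `negative_slope=0.01` default for Leaky ReLU is an assumed, commonly used value.

```python
import math

def sigmoid(x):
    """1 / (1 + e^(-x)), squashes to (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def tanh(x):
    """(e^x - e^(-x)) / (e^x + e^(-x)), squashes to (-1, 1)."""
    return math.tanh(x)

def relu(x):
    """max(0, x)."""
    return max(0.0, x)

def leaky_relu(x, negative_slope=0.01):
    """x for positive inputs, a small slope times x otherwise."""
    return x if x > 0 else negative_slope * x
```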


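SwiGLU module

- SiLU-Gated Linear Unit:

- SiLU function:

- Uses two independent linear projections

- Advantage: more stable than ReLU/GELU in LLM FFNs; improves representational capacity at a small parameter cost

- In LLaMA, these correspond to gate_proj/up_proj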
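A functional sketch of the gating, assuming the usual definition SiLU(x) = x * sigmoid(x). The names `silu_manual`, `swiglu`, `w_gate`, and `w_up` are hypothetical, and the final down projection is left out to keep the focus on the gate.

```python
import torch
import torch.nn.functional as F

def silu_manual(x):
    """SiLU (Swish with beta = 1): x * sigmoid(x)."""
    return x * torch.sigmoid(x)

def swiglu(x, w_gate, w_up):
    """Gate one linear projection of x with the SiLU of another."""
    return silu_manual(x @ w_gate) * (x @ w_up)

torch.manual_seed(0)
x = torch.randn(2, 4)
w_gate, w_up = torch.randn(4, 6), torch.randn(4, 6)  # two independent projections
h = swiglu(x, w_gate, w_up)
```

`silu_manual` agrees with `torch.nn.functional.silu`, so either can be used for the gate.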

Excerpted from transformers/src/transformers/models/llama/modeling_llama.py

down_proj = self.down_proj(self.act_fn(self.gate_proj(x)) * self.up_proj(x))

SwiGLU implementation

down_proj = self.down_proj(self.act_fn(self.gate_proj(x)) * self.up_proj(x))